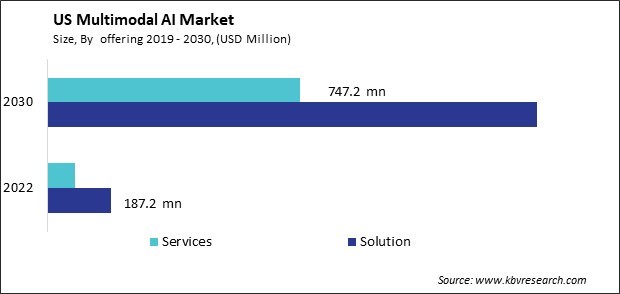

The US multimodal AI market is expected to reach $2.2 billion by 2030, growing at a CAGR of 30.5% during the forecast period.

The multimodal AI market in the United States is growing rapidly. The technology is increasingly being integrated across the healthcare, automotive, retail, education, and customer service sectors. Companies are leveraging multimodal AI for applications such as virtual assistants, personalized recommendations, diagnostics, and enhanced user experiences.

Technology giants, startups, and research institutions are making significant investments in developing and advancing multimodal AI technologies. This investment fosters innovation and pushes the boundaries of what these systems can achieve. The US is home to a thriving startup ecosystem, and multimodal AI has been a focal point for many emerging companies. Venture capital funding has poured into startups specializing in multimodal AI, enabling them to innovate and develop new applications and use cases. These startups often bring fresh perspectives and niche solutions, contributing to the overall innovation in the field.

Major technology companies in the United States, including Apple, Google, Microsoft, and Amazon, have invested significant resources in the study, development, and implementation of multimodal AI technologies. These companies invest in both in-house R&D and acquisitions of startups specializing in AI, thereby fostering innovation and pushing the envelope in terms of what multimodal AI systems can achieve.

Regulatory frameworks and standards around AI and data usage are evolving. The US government and various agencies are exploring policies and guidelines to govern the ethical and responsible use of AI technologies. The Biden-Harris Administration is committed to advancing responsible AI systems that are ethical, trustworthy, and safe and that serve the public good. The fiscal year (FY) 2023 President's Budget Request included substantial and specific funding requests for AI R&D as part of a broad expansion of federally funded R&D to advance key technologies and address societal challenges. The CHIPS and Science Act of 2022 and the Consolidated Appropriations Act, 2023 reflect Administration and Congressional support for an expansion of federally funded R&D, including AI R&D.

The US has witnessed an explosion of digital data from various sources such as social media, e-commerce transactions, IoT devices, sensors, and more. This diverse data landscape includes text, images, videos, audio, and sensor-generated information, providing a wealth of multimodal data for AI systems. With the proliferation of smartphones, wearables, and connected devices, individuals generate vast amounts of data daily. This data, ranging from location information to user behavior, contributes to the multimodal AI dataset, enabling more comprehensive analyses and predictions.

Social media platforms generate massive amounts of multimodal content (photos, videos, comments, and reactions), providing insights into consumer sentiments, trends, and preferences. AI algorithms use this data for sentiment analysis, trend identification, and targeted marketing. Industries across the US are increasingly relying on data-driven strategies. Companies recognize the value of data in making informed decisions, driving innovation, and gaining competitive advantages, leading to a surge in demand for AI systems capable of handling multimodal data. The confluence of these factors, namely the ample availability of diverse data sources, technological advancements in data collection, and the growing reliance on data-driven insights, has propelled the demand for multimodal AI solutions in the US.

Multimodal AI has been making significant inroads into the retail and e-commerce sectors in the US, transforming various aspects of the industry. Platforms like Pinterest and Google Lens use this technology to allow users to search for items by uploading images. Moreover, integrating voice assistants with e-commerce platforms enables users to shop using voice commands. Amazon's Alexa, for example, facilitates voice-based shopping on Amazon's platform, allowing users to add items to their cart or make purchases through voice commands.

Combining data from various sources like browsing history, purchase behavior, and demographic information with AI enables personalized product recommendations. Companies like Amazon and Netflix use this approach to suggest products or content tailored to individual preferences. Multimodal AI-powered chatbots assist customers in finding products, providing information, and offering support. For instance, The North Face uses an AI-powered chatbot to help customers find products suited to their needs and preferences.

Furthermore, multimodal AI analyzes data from various sources to predict customer demand, allowing retailers to optimize inventory and supply chain management. Companies like Walmart use AI-driven demand forecasting to streamline inventory levels and enhance efficiency. These applications demonstrate how multimodal AI is revolutionizing the retail and e-commerce landscape in the US, providing enhanced experiences for customers, optimizing operations, and driving business growth.

The multimodal AI market is becoming increasingly competitive, with numerous players entering the space. This competition stimulates innovation and broadens the market, providing businesses and consumers with a greater selection of products and services. Well-known companies in the US multimodal AI market include OpenAI, Microsoft, Facebook (Meta), IBM, Google (Alphabet Inc.), NVIDIA, Salesforce, and Adobe.

OpenAI is known for its work toward artificial general intelligence. It has been involved in projects like GPT-3 (Generative Pre-trained Transformer 3), a powerful text-based language model, and it continues to explore applications of advanced AI technologies.

Likewise, Google has been actively involved in the field, with projects like BERT (Bidirectional Encoder Representations from Transformers) for natural language processing, one of the component technologies of multimodal AI. Google's research division, Google Research, continues to advance multimodal AI technologies.

Microsoft has been investing in multimodal AI applications across its products. It continues to work on AI technologies for domains including computer vision, speech recognition, and natural language processing, integrating them into services like Azure Cognitive Services.

Salesforce has invested in AI for customer relationship management (CRM). Its platform, Einstein, incorporates AI capabilities, including natural language processing, to provide personalized insights and predictions for sales and customer service.

These organizations are pioneers in researching, developing, and implementing multimodal artificial intelligence across diverse sectors, fostering advancements in computer vision, natural language processing, and other related fields.

By Offering

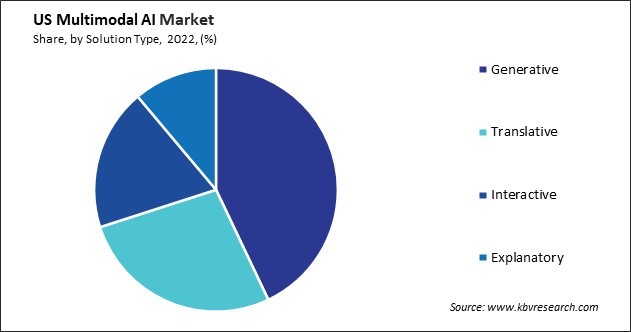

By Type

By Technology

By Data Modality

By Vertical
